In the past year or two, the business world has felt more like a bumpy ride than a smooth “transformation.” Employees are dealing with a lot of uncertainty—roles changing or getting eliminated entirely, teams shuffling, and rules shifting mid-game. But leaders aren’t operating from a crystal-clear blueprint either. Many are making big cuts, not just because AI speeds things up, but because they honestly can’t see what the company should look like long-term. So, they reduce costs and complexity first, then plan to rebuild smarter.

The tricky part is that AI isn’t a neat replacement for people or their jobs. It absolutely makes many tasks faster, but it also creates entirely new work. Think about customer support: many companies use chatbots to handle volume, but now someone has to watch performance, check logs to find problems, fine-tune the prompts and rules, and constantly improve the system. The work doesn’t disappear; it just moves and changes form.

Still, one advantage is unmistakable. For decades, the biggest hidden cost in any company was the Coordination Tax. You know the drill: an engineer builds a feature, then hands it to a product manager, who syncs with marketing, who waits for a business dev lead to find a partner. Every handoff is a friction point and every meeting is a tax on productivity. But now, a single “pod” of 5 to 10 people, mixing engineering, design, and growth talent, can execute faster than a 50-person department.

2026 looks like the year that some of these trends become normal: smaller, multidisciplinary, autonomous feature teams, and flatter organizations where the middle-management role evolves from “traffic cop” to “coach + systems designer.”

Trend 1: Smaller, multidisciplinary “feature teams” become the default

As AI lifts individual capability, it becomes feasible to staff product work like a small startup.

Instead of a large, functionally segmented machine, you get teams of 5–10 people who can handle a feature from the initial idea all the way through building, shipping, and improving it. Speed shoots up because there is less coordination and fewer approvals needed. Quality rises because feedback loops tighten.

We can already see executives publicly describing this “tiny team” dynamic.

- Mark Zuckerberg recently noted that AI lets “a single very talented person” tackle projects that used to need “big teams,” and he’s actively pushing to “flatten teams.”

- Tobias Lütke, Shopify’s CEO, gave his teams a powerful signal: before asking for more people, you have to prove why you “cannot get what you want done using AI.” This directly asks them to think of AI as a part of their team that handles work autonomously.

- Duolingo’s CEO, Luis von Ahn, sees AI as a “platform shift” and is adjusting things like hiring and performance reviews. The company is also reducing contractor work where AI can step in, another clear move toward “smaller teams that deliver much more.”

The common thread in these examples isn’t just “AI is useful.” It’s that they are rethinking the basic rules of how the company operates. The organization shifts from shuffling work between departments to empowering small, focused teams to fully own their results.

Ever watched a two-person team crank out something amazing over a weekend and thought, “How did they move so fast?” Now, imagine that kind of efficiency happening across dozens of teams, each one fully supported by AI.

That’s the emerging design pattern.

Trend 2: Flatter orgs and middle management under pressure

Once small, autonomous teams are successful, a second-order effect kicks in: you just don’t need as many layers of management to keep them in sync. This is the point where the discussion about “flattening” an organization becomes very real, and uncomfortable.

Experts studying the workforce have been tracking “delayering” everywhere, not just in tech. Korn Ferry, for instance, talks about companies “thinning out their management midsections,” pointing out that middle managers were a big part of 2024 layoffs, clear evidence that the traditional manager role is under structural pressure.

And major corporations are openly saying their restructures are about cutting bureaucracy. Amazon’s corporate layoffs, for instance, were reported as an effort to reduce organizational layers and operate more efficiently.

So, the pattern we should expect to continue seeing in 2026 isn’t a world with “no managers.” It’s a world with fewer managers whose fundamental job is completely different.

Which brings up an important, yet simple, question: If AI reduces coordination work, what exactly should managers coordinate?

The manager’s role is evolving

In most modern orgs, managers have been doing (at least) three different things:

- People development: coaching, feedback, growth, hiring, conflict navigation, culture

- Technical/project leadership: running execution, reviewing work, unblocking tasks, prioritizing

- Systems and strategy: setting guardrails, aligning across teams, shaping operating systems, long-term planning

AI and autonomous feature teams change the distribution of these responsibilities.

1) People development becomes more important, not less

As teams become more independent and things change faster, people really need grounding: clear direction, a safe space to work, ways to grow, and honest feedback. AI can help write a review, but it can’t handle the human side of building trust, shaping identity, and finding meaning in work.

2) Day-to-day technical leadership moves closer to the team

Here’s where the scope for many middle managers gets a little smaller. When you have a focused feature team, the day-to-day execution leadership often happens right within the group—think a senior engineer, the product and design leads, and a shared AI process. The manager’s job shifts away from being the daily air traffic controller.

3) Systems-level strategy becomes the manager’s differentiator

As pods proliferate, someone must design the system those pods operate within: what cadence they work on, what the quality rules are, how risk is controlled, what the portfolio priorities are, and how the teams talk to each other.

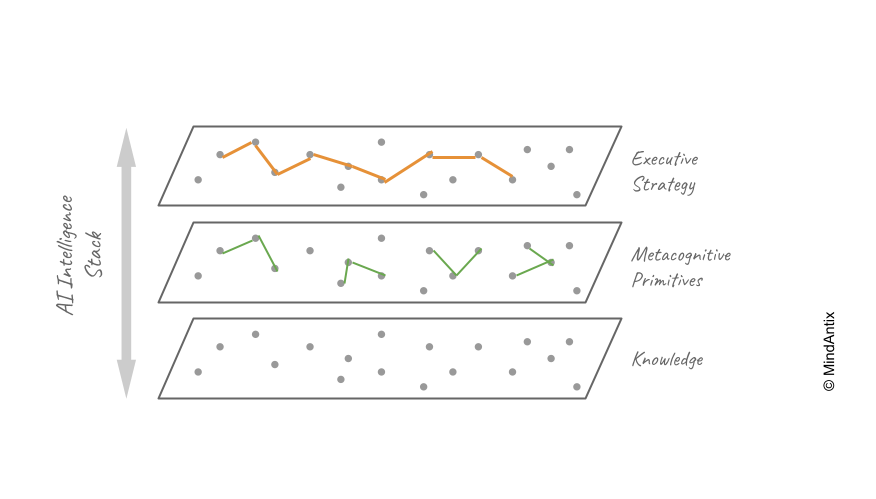

This is exactly the direction highlighted by McKinsey & Company in its writing on “agentic” organizations: we’ll see more M-shaped supervisors (broad generalists orchestrating agents and hybrid work across domains) alongside T-shaped experts (deep specialists safeguarding quality and exceptions).

As agents take on more execution, managers are freed up from admin tasks. Their focus is shifting toward leading people and orchestrating these blended systems. In other words, the ideal talent profile is changing. It’s less about being “the smartest technical person in the room” and much more about emotional intelligence and the ability to think strategically and connect the dots.

The emerging operating model

So what does this look like in practice? Expect more organizations to formalize patterns like:

Autonomous Feature Pods: Small teams of about five to ten people, each with a crystal-clear mission, like “improve customer sign-ups.” These teams are accountable for everything, from building to shipping to measuring success. They have all the necessary skills embedded right in the pod: product, design, engineering, and data. What makes them so fast? They use AI agents as built-in assistants for everything from research and drafting to testing and analysis.

Thinner Management Layers: This shift also means a leaner leadership structure with fewer layers of management. The managers who remain will take on a wider scope, focusing less on directing tasks and more on coaching and ensuring the system is running smoothly. We’ll also see more senior, non-managerial roles, like staff or principal engineers, who provide technical leadership without adding more hierarchy.

Guardrails over Gates: Finally, the way work is governed is changing from “approval-heavy” to “principle-driven.” Instead of waiting for sign-offs on every step, teams operate within clear “guardrails”—security protocols, data policies, quality standards, and ethical rules. This allows teams to move much faster and ship products without constant delays.

The trend for the rest of 2026 is clear: Organizations will continue to shrink in headcount but explode in impact. We are moving away from the “industrial” model of management, where people were cogs in a machine, toward a “biological” model, where small, autonomous cells work together to create a living, breathing, and highly adaptive organism.