Twelve-year-old Leo sat in his room, staring at his history assignment on the Industrial Revolution. Usually such an assignment would take several hours: reading relevant material, picking a thesis, finding evidence to support his claims, and then drafting the essay. But today, he simply fed a prompt into ChatGPT, made a few quick revisions, and hit submit.

On paper he looked like a star student. His essay even got him an ‘A’ from his teacher. But did any real learning take place?

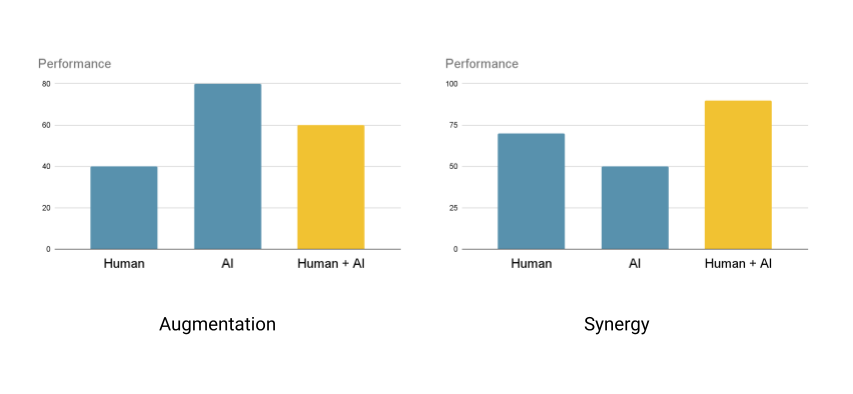

This is the crisis we face in education. We are currently obsessed with the “Human-in-the-Loop” model, where humans oversee AI outputs. But in a classroom, that model is backwards. If we want to raise a generation of innovators rather than mere prompt engineers, we have to flip the script to an “AI-in-the-Loop” approach that prioritizes the student’s cognitive effort before the algorithm ever enters the chat.

Renzulli’s Three-Ring Conception of Giftedness

To understand why current AI integration is risky, we have to look at what actually creates high-level human performance. One of the most respected frameworks in educational psychology is Joseph Renzulli’s Three-Ring Conception of Giftedness.

Renzulli argued that “giftedness” (or what we might call high-level creative productivity) lies at the intersection of three distinct clusters of human traits:

- Above-Average Ability: The core competency and foundational knowledge in a specific domain.

- Creativity: The ability to generate original ideas, see new patterns, and think divergently.

- Task Commitment: The grit, perseverance, and “productive struggle” required to see a difficult project through to the end.

When these three rings overlap, giftedness emerges. However, the current “plug-and-play” integration of AI in schools threatens to thin out every one of these rings, potentially preventing students from reaching high levels of accomplishment.

The Risk to Ability

The most immediate danger of AI is cognitive offloading: the tendency to use external tools to reduce the mental effort required for a task. While offloading is great for mundane chores (like using GPS while driving), it is harmful when the mental effort is itself the point, as it is in learning.

We already know that easy access to external information changes what people remember and how they allocate mental effort. The classic “Google effect” research showed that when people expect information to be accessible later, they are less likely to remember the information itself and more likely to remember where to find it. That isn’t necessarily bad; humans have always relied on tools and transactive memory. But in schooling, ability is built through repeated retrieval, reconstruction, and sense-making.

Research has already begun to show the impact of cognitive offloading. In a large field experiment with high school math students, researchers found that a “ChatGPT-like” tutor improved performance during practice, but when the AI support was removed, those students performed worse than students who learned without the AI tool, an effect consistent with dependence and reduced durable learning.

Without guardrails, AI can act as a “cognitive crutch.” By providing hints and solving intermediate steps, it removes “desirable difficulty,” the kind of struggle that leads to deeper learning and long-term retention. If the AI does the heavy lifting, students learn less and never reach the level of competence that high achievement requires.

The Risk to Creativity

Creativity is not just producing something. It’s producing something both novel and appropriate.

AI is remarkably good at the obvious. Large language models generate outputs that reflect patterns in their training data. That makes them useful, fluent, and fast, but it also means they can pull learners toward the statistical center of what’s been said before.

A recent experiment on creative writing found that access to AI ideas helped individuals produce stories judged as more creative (especially among less creative writers), but it also made the stories more similar to one another, reducing collective novelty. In other words, AI can raise the floor for individual writers while narrowing the diversity of ideas across the group.

Separate work has begun to quantify this “echo” effect more directly, showing measurable limits on plot diversity in LLM outputs under the same prompts. And broader research reviewing LLM creativity suggests that while models can appear creative through recombination, there are persistent questions about originality, intent, and the difference between pattern completion and human creative agency.

When students brainstorm with AI first, they often anchor on the suggestions they see and fall into an “associative rut.” If they took the time to think for themselves before turning to AI, they would be more likely to discover original and personally meaningful ideas.

And we’ve seen a similar phenomenon long before AI. Research on brainstorming has shown that nominal brainstorming (individual idea generation before group discussion) produces more ideas and more original ideas than purely interactive brainstorming. In the AI context, the LLM acts like a “dominant personality” in a group meeting. It speaks first, speaks confidently, and sets a baseline. Once a student sees those AI suggestions, their brain finds it incredibly difficult to think outside those parameters.

The Risk to Commitment

Task commitment includes persistence, delayed gratification, self-regulation, and the willingness to stay with ambiguity.

Modern technology has already been wearing away at this ring. Research shows that higher use of digital devices is tied to concentration difficulties, lower academic performance, and poorer self-regulation.

Now layer generative AI on top. In a world where a chatbot can produce instant essays and workable code, the emotional “cost” of effort feels high. Why wrestle with a challenge when the answer is right at your fingertips?

Emerging education research is also starting to map how generative AI intersects with self-regulated learning—highlighting both risks (over-reliance, reduced monitoring) and opportunities (scaffolds for planning, reflection, and feedback) depending on design and pedagogy. And survey-based findings have reported associations between ChatGPT use and procrastination or lower performance in some student samples, suggesting that without strong norms and supports, AI can drift from scaffold to shortcut.

Then there’s an additional twist: changing expectations. Once AI is available, teachers and workplaces may (implicitly or explicitly) expect faster output. But speed is not the same as depth. Many breakthroughs require a long dwell time. If we compress the timeline before students have built the inner muscles of persistence, we don’t get high performers.

The Way Out

In many AI discussions, “human-in-the-loop” sounds reassuring: the human checks the AI’s work. But in education, that framing can be backwards. It puts students in the role of evaluator rather than constructor, as if learning were mainly about spotting mistakes in someone else’s thinking.

Decades of learning science tell us that durable learning is constructive and interactive. The ICAP framework, for example, shows that learning activities that are Interactive and Constructive generally outperform merely Active or Passive engagement. Students learn more when they generate, explain, debate, and build meaning, rather than just consume or lightly manipulate information.

In education, we need a “Learning-First” model that prioritizes human cognition before algorithmic assistance. A sample five-phase framework could look like this:

Phase 1 (Individual): students write an initial thesis, solution path, or set of ideas before using AI. This protects original cognition and forces retrieval, sense-making, and ownership.

Phase 2 (Group): students critique and build together. This is where misconceptions surface and learning becomes social, as students learn from each other.

Phase 3 (AI): only then does AI enter, as a gap-finder, a generator of alternative perspectives, or a Socratic questioner. It reveals elements that students might have missed and stretches their thinking.

Phase 4 (Group): the group revises its solution based on the AI’s feedback, incorporating the suggestions that are reasonable and rejecting those that don’t fit.

Phase 5 (Individual): individuals reconstruct the argument/solution in their own words because self-explanation is a reliable way to accelerate understanding.

This scaffolded approach protects each ring of Renzulli’s model. Ability is built through retrieval and reconstruction. Creativity is protected through first-thought originality and peer divergence. Task commitment is strengthened through social support and reflection.

Conclusion

Education has a choice to make. Without the right guardrails on how AI is used, we risk teaching the models instead of the students.

The danger of a default “human-in-the-loop” stance in classrooms is that it casts students as editors of machine work. But learning isn’t editorial. Students must build mental models, connect ideas, and develop the internal fluency that only comes from doing the cognitive work themselves.

So the guiding question for AI in education should be, “Which phase of learning does this tool strengthen, and which phase might it accidentally replace?”

If we stay student-first and learning-first, we’ll use AI the way every great teacher uses support: not to remove the mountain, but to help students become the kind of climbers who can scale it, long after the tool is gone.