Over the past year or so, AI experts such as Ilya Sutskever, in his NeurIPS 2024 talk, have raised concerns that progress in AI reasoning might be hitting a wall. Throwing more data and compute at the problem seems to yield diminishing returns, and models still struggle with complex reasoning tasks. Maybe it’s time to explore other facets of human reasoning and intelligence rather than relying on sheer computational force.

A key part of human intelligence is our ability to pick out just the right information from memory to solve the problem at hand. Imagine a toddler seeing a puppy in a park. If they’ve never encountered a puppy before, they might feel scared or unsure. But if they’ve seen a friend playing with a puppy, or watched the neighbors’ dogs, they can draw on those experiences and decide to go ahead and pet the new one. As we get older, we do this in far more intricate situations: we take ideas from one domain and apply them to another when the patterns fit. In essence, we hold a vast collection of knowledge (information and experiences), and to solve a problem we first need to identify the useful subset of it.

Think of current large language models (LLMs) as having absorbed a vast share of human-created artifacts: text, images, code, and even elements of audio and video through transcripts. Because they are trained as predictive engines that forecast the next word or “token,” the basic reasoning they exhibit emerges from statistical structure in the data rather than from deliberate thought. What is truly remarkable is how far this extensive “knowledge layer” gets on statistical prediction alone.

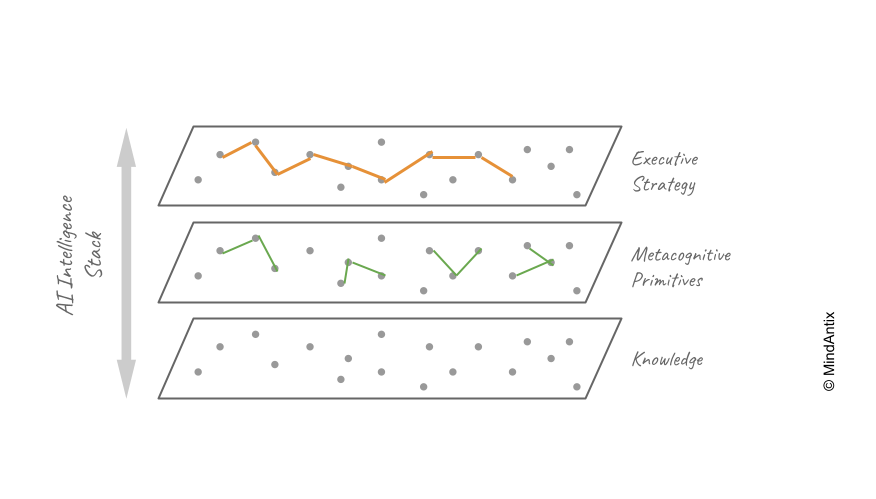

Beyond this statistical stage, prompting techniques such as assigning the LLM a specific role improve reasoning further. Intuitively, they seem to work by steering the model toward the more relevant parts of what it has learned, which raises the quality of the information it draws on. More advanced strategies, such as Chain-of-Thought or Tree-of-Thoughts prompting, mirror human reasoning by guiding the LLM through a structured, multi-step traversal of its knowledge. These strategies are best seen as higher-level policies that dictate how to proceed. A fitting name for this level might be the Executive Strategy Layer: this is where planning, exploration, self-checking, and control policies reside, much like the executive network in the human brain.

However, current research may be missing a middle layer: metacognitive primitives. Think of these as simple, reusable patterns of thought that can be invoked and combined to strengthen reasoning regardless of the topic. While the Executive Strategy Layer helps an AI break a task into smaller steps, the metacognitive primitive layer helps ensure that each of those steps is tackled with a well-chosen cognitive move. Such moves might include finding similarities or differences between two ideas, shifting between levels of abstraction, connecting distant concepts, or looking for counter-examples. They go beyond statistical prediction and act as building blocks for more complex reasoning. Building this layer could substantially expand what the Executive Strategy Layer can achieve.
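To make the idea of a primitive layer more concrete, here is a minimal sketch that assumes each primitive can be represented as a plain-text instruction attached to a single sub-step. The names `Primitive`, `PRIMITIVES`, and `apply_primitives`, as well as the instruction wording, are hypothetical illustrations rather than an established implementation; sending the resulting prompt to a model is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Primitive:
    """A reusable, topic-agnostic cognitive move (hypothetical representation)."""
    name: str
    instruction: str


# Illustrative wording only: one entry per primitive named in the text above.
PRIMITIVES = {
    p.name: p
    for p in (
        Primitive("compare_contrast",
                  "List the most important similarities and differences between the ideas involved."),
        Primitive("shift_abstraction",
                  "Restate the problem one level more general and one level more concrete."),
        Primitive("connect_distant",
                  "Find a concept from an unrelated field with a similar structure and borrow from it."),
        Primitive("counterexample",
                  "Propose an answer, then look for a counter-example that would break it."),
    )
}


def apply_primitives(step: str, names: list[str]) -> str:
    """Build a prompt for one sub-step by stacking the chosen primitives in order."""
    moves = [f"{i}. {PRIMITIVES[n].instruction}" for i, n in enumerate(names, start=1)]
    return "Before answering, apply these thinking moves:\n" + "\n".join(moves) + f"\n\nStep: {step}"


if __name__ == "__main__":
    print(apply_primitives("Choose a database for a chat app.",
                           ["compare_contrast", "counterexample"]))
```

Because the primitives are independent of the step’s subject, the same two moves could wrap a step from a legal, medical, or engineering task without changing any of their wording.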

To understand what these core metacognitive ideas might look like, it helps to consider how we teach human intelligence. In schools, we don’t just teach facts; we also help students develop ways of thinking that carry across many subjects. For instance, Bloom’s revised taxonomy orders levels of thinking from remembering and understanding, through applying, up to analyzing, evaluating, and creating. Similarly, Sternberg’s theory of successful intelligence combines analytical, creative, and practical abilities. Each of these categories in turn relies on simpler thought patterns: small cognitive actions like “compare and contrast,” “change the level of abstraction,” or “find an analogy” play an important role in analytical and creative thinking.

The exact position of these thought patterns in a taxonomy is less important than making sure learners acquire these modes of thinking and can combine them in adaptable ways.

As an example, one primitive central to creative thinking is associative thinking: connecting two distant or unrelated concepts. In a study last year, we showed that simply asking an LLM to incorporate a random concept measurably increased the originality of its outputs across tasks like product design, storytelling, and marketing. In other words, turning on a single primitive can change the kinds of ideas the model explores and make it more creative. A similar argument applies to compare–contrast as a domain-general primitive: by examining the important aspects of two ideas and searching for “surprising similarities or differences,” a model may produce better-reasoned responses. As we standardize such primitives, we can combine them within higher-order strategies to achieve reasoning that is both more reliable and easier to interpret.
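The two primitives described above can be sketched as simple prompt builders. This is an illustration under stated assumptions: the concept pool, the function names `associative_prompt` and `compare_contrast_prompt`, and the phrasing are hypothetical and are not the prompts or word list used in the study; a seeded `random.Random` is passed in so the random concept is reproducible.

```python
import random

# Hypothetical concept pool; the study's actual word list is not given in the text.
CONCEPT_POOL = ["lighthouse", "origami", "beehive", "glacier", "jazz", "compass", "mycelium"]


def associative_prompt(task: str, rng: random.Random) -> tuple[str, str]:
    """Associative-thinking primitive: ask the model to weave in a random, unrelated concept.

    Returns the chosen concept and the augmented prompt.
    """
    concept = rng.choice(CONCEPT_POOL)
    prompt = (
        f"{task}\n"
        f"Incorporate the concept '{concept}' into your answer, "
        "drawing a meaningful connection rather than a superficial mention."
    )
    return concept, prompt


def compare_contrast_prompt(a: str, b: str, aspects: list[str]) -> str:
    """Compare-contrast primitive: probe chosen aspects for surprising similarities or differences."""
    bullet_list = "\n".join(f"- {aspect}" for aspect in aspects)
    return (
        f"Compare {a} and {b} along these aspects:\n{bullet_list}\n"
        "Point out any surprising similarities or differences, then use them in your answer."
    )


if __name__ == "__main__":
    _, prompt = associative_prompt("Propose a new reusable water bottle design.", random.Random(42))
    print(prompt)
    print(compare_contrast_prompt("a subscription model", "a one-time purchase",
                                  ["customer retention", "cash flow"]))
```

Either builder only changes the prompt; whether it actually improves originality or reasoning quality would still have to be measured on the target tasks.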

In summary, giving today’s AI systems a metacognitive-primitives layer, positioned between the knowledge base and the Executive Strategy Layer, might provide a practical path to stronger reasoning. The knowledge layer provides the content; the primitives layer supplies the cognitive moves; and the executive layer plans, sequences, and monitors those moves. This three-part structure mirrors how human expertise develops: it’s not only about knowing more, or only about planning better, but about having the right units of thought to analyze, evaluate, and create across varied situations. If we give LLMs explicit access to these units, we might expect gains in their ability to generalize, self-correct, be creative, and be transparent, moving them from simply predicting text toward truly adaptive intelligence.
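The three-part structure can be sketched as a small pipeline, assuming the knowledge layer is any text-in, text-out model call (stubbed below rather than tied to a specific LLM API). `run_plan`, `PRIMITIVES`, the `accept` check, and the single fallback retry are hypothetical design choices meant only to show how an executive layer might sequence primitive moves and monitor their results.

```python
from typing import Callable

# Knowledge layer: any text-in, text-out model call (assumed interface).
Model = Callable[[str], str]

# Primitives layer: reusable cognitive moves (hypothetical wording).
PRIMITIVES: dict[str, str] = {
    "compare_contrast": "Identify key similarities and differences before answering.",
    "shift_abstraction": "Restate the sub-step more generally, solve it, then make it concrete.",
    "counterexample": "Propose an answer, then test it against a counter-example.",
}


def run_plan(
    task: str,
    plan: list[tuple[str, str]],
    model: Model,
    accept: Callable[[str], bool],
    fallback: str = "counterexample",
) -> list[str]:
    """Executive layer: sequence sub-steps, apply each one's primitive, and monitor results.

    Each plan entry is (sub-step, primitive name). If `accept` rejects an answer,
    the sub-step is retried once with the `fallback` primitive.
    """
    notes: list[str] = []
    for substep, primitive in plan:
        for move in (primitive, fallback):
            prompt = (
                f"Task: {task}\n"
                f"Earlier results: {' | '.join(notes) or 'none'}\n"
                f"Thinking move: {PRIMITIVES[move]}\n"
                f"Sub-step: {substep}"
            )
            answer = model(prompt)
            if accept(answer):
                break
        notes.append(answer)  # keeps the last attempt, even if it was rejected
    return notes


def demo_model(prompt: str) -> str:
    """Stub knowledge layer; a real system would call an LLM here."""
    return f"draft answer ({len(prompt)} characters of context)"


if __name__ == "__main__":
    steps = [("Frame the goal", "shift_abstraction"), ("Pick a channel", "compare_contrast")]
    for note in run_plan("Plan a product launch", steps, demo_model,
                         accept=lambda answer: bool(answer.strip())):
        print(note)
```

In practice `accept` could itself be a model call, such as a self-check prompt; a plain predicate keeps the sketch small and testable while still showing where monitoring sits.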